Introduction

In laboratory medicine, several studies have described the most frequent errors in the different phases of the total testing process (TTP) (1-12), and a large proportion of these errors occur in the pre-analytical phase (2,5,13-17).

The first step in improving the quality of the pre-analytical phase is to describe potential errors and to estimate which errors are most dangerous for patient outcome (13,18-22). Existing pre-analytical procedures should be compared to existing recommendations and thereafter improved to minimize the risk of errors. In addition, the frequency of errors should be recorded on a regular basis to detect improvement or deterioration over time, and to assess whether procedures should be changed.

Schemes for recording errors and providing feedback to participants have been run for decades for the analytical phase by External Quality Assessment (EQA) organizations operating in most countries. It is reasonable that these organizations also take it upon themselves to set up EQA schemes (EQAS) for the pre-analytical phase. At present, however, most EQA organizations do not offer such schemes. An important challenge when developing EQAS for the extra-analytical phases is the variety of locations and staff groups involved in the total testing process, several of which are outside the laboratory’s direct control. Test ordering, data entry, specimen collection and handling, and interpretation of results often involve staff other than laboratory personnel. Some pre-analytical schemes, e.g. schemes on test ordering, could therefore also involve other health care professionals. Contact and communication between clinicians or nurses and laboratory staff are often limited (7,23). ISO 15189:2012 states that “External quality assessment programs should, as far as possible, provide clinically relevant challenges that mimic patient samples and have the effect of checking the entire examination process, including pre- and post-examination procedures” (item 5.6.4) (24). To our knowledge, and in contrast to what is required for the analytical phase, accreditation bodies do not ask laboratories for results from EQAS for the pre-analytical phase. If this changes, it will be a driver for EQA organizations to set up such schemes and for participants to use them. Consequently, a joint effort between EQA organizations, accreditation bodies and clinical laboratories seems necessary to implement pre-analytical EQA schemes. The aim of this paper is to present an overview of different types of EQA schemes for the pre-analytical phase and to give examples of existing schemes.

How to perform a pre-analytical EQA scheme?

Fortunately, EQAS for the pre-analytical phase are increasingly being developed, and three broad types of scheme have been used:

Type I: Registration of procedures: Circulation of questionnaires asking about procedures on the handling of certain aspects of the pre-analytical phase, e.g. how “sample stability” or tube filling are dealt with, which criteria are used for sample rejection, and how these issues are communicated to the requesting physicians.

Type II: Circulation of samples simulating errors: For example, distribution of real samples with interfering matrices or with contamination that might interfere with the measurement procedures. This is similar to conventional analytical EQAS, since a sample is sent to the laboratories. Case histories can be sent together with the sample to elucidate how such samples are dealt with and how the results are communicated to the physicians.

Type III: Registration of errors/adverse events: The incidence of certain types of pre-analytical errors (some of which could serve as quality indicators (QIs)) is registered and reported by the laboratories to the EQA organizer.

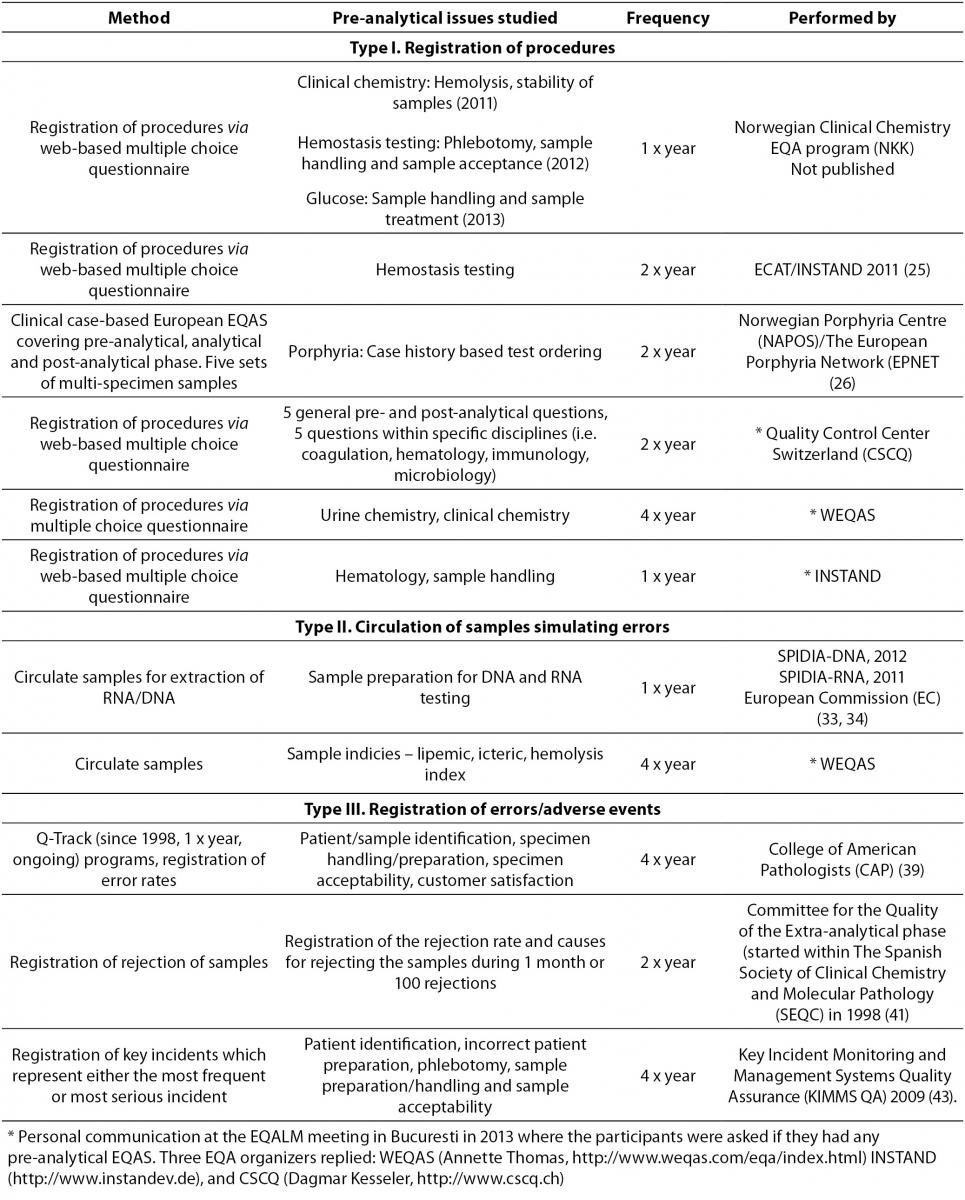

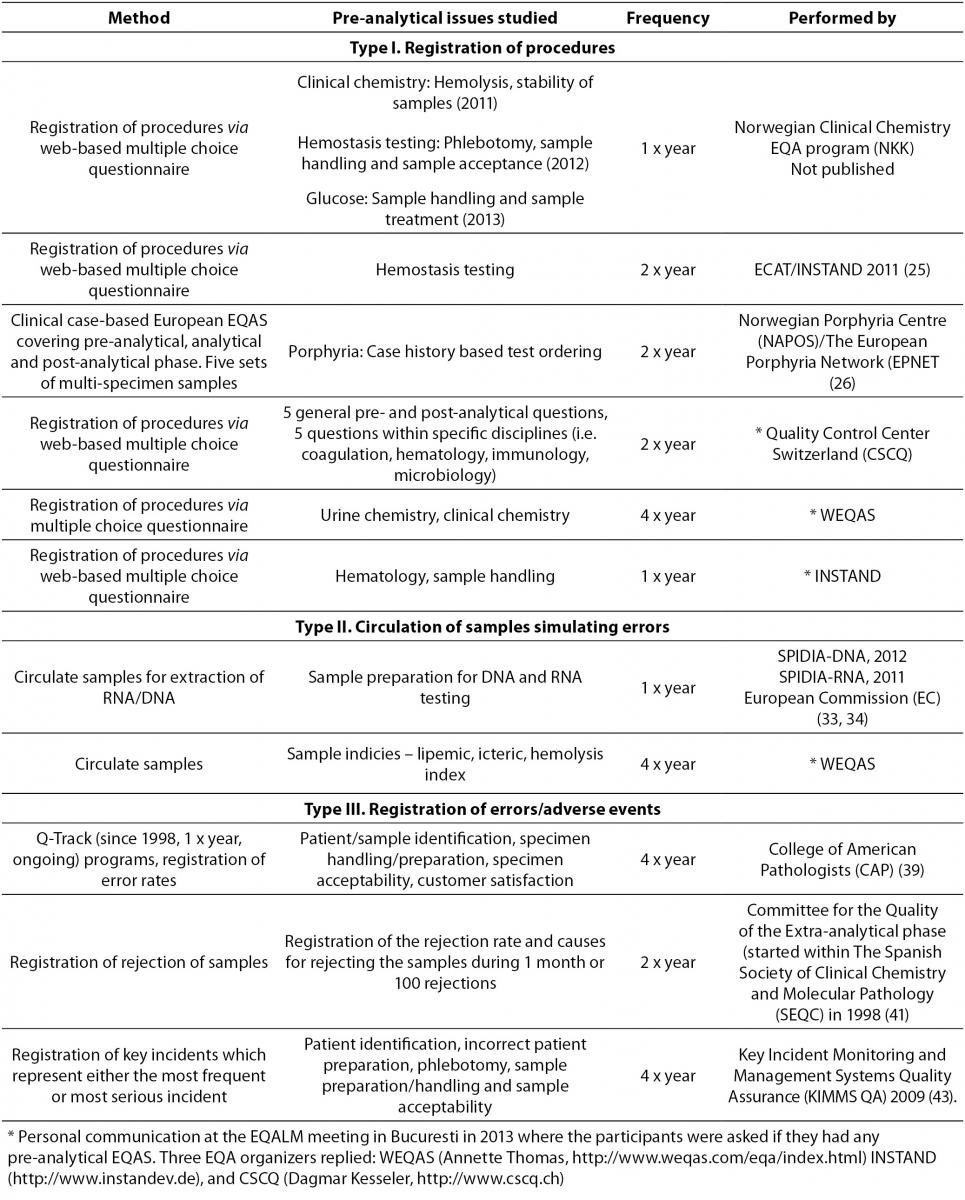

The EQA organizer should, as usual, provide feedback reports to the participating laboratories, enabling them to compare their results with those of the other participants. In addition, the feedback reports should include advice on how to minimize errors. In the case of error reporting (Type III), the EQA organizer should also lead the harmonization of QIs to allow a valid comparison of error rates between different locations. Examples of the three different types of pre-analytical EQAS are given below. Ongoing EQAS are summarized in Table 1.

Table 1. Examples of ongoing pre-analytical EQAS.

Type I: Registration of procedures

Identification and registration of pre-analytical procedures can be performed by circulating questionnaires, with open or multiple choice questions. Case histories to illustrate the potential consequences of pre-analytical errors for the patients may be included. A clear advantage of this type of EQAS is the limited resources needed for distributing and completing the scheme. Several aspects of the pre-analytical phase can be covered, and the questionnaire may potentially reach laboratories all over the world in a short time using an electronic contact and report form.

The Norwegian EQA program (NKK) has developed a pre-analytical EQA scheme which aims to identify especially problematic pre-analytical issues related to clinical chemistry analyses and hemostasis testing. This scheme is carried out once a year by circulating web-based questionnaires (mostly multiple choice questions) on different aspects of the pre-analytical phase to the Norwegian laboratories. The laboratories receive a feedback report after each survey, showing their own results, the overall results and an overview of the relevant recommended procedures from guidelines and recent studies. The three surveys performed so far (2011, 2012 and 2013) (Table 1) concluded that there is large variability in several pre-analytical practices and considerable room for improvement.

In 2009, the Croatian Chamber of Medical Biochemists (CCMB) performed a survey in Croatian laboratories to investigate the appropriateness of procedures in the extra-analytical phases and to detect the procedures most prone to errors of potential clinical importance (27). A multiple choice questionnaire covering pre-analytical conditions, criteria for sample acceptance and phlebotomy procedures was circulated to members of CCMB. The study concluded that there is an urgent need for improvement through appropriate recording and monitoring of the extra-analytical phases, emphasizing the importance of initiating pre- and post-analytical EQAS.

Labquality, the Finnish EQA organization, is about to start pre-analytical EQAS in 2014. The program will include case studies and multiple choice questionnaires concerning phlebotomy practices and pre-analytical procedures for clinical chemistry, microbiology and blood gas analyzers (personal communication from Jonna Pelanti, Labquality, FI).

Challenges regarding registration of procedures as pre-analytical EQAS

To avoid misinterpretations, the questionnaires used in EQAS should be validated by experts in the field and/or in pilot studies with a few laboratories. This is especially important if the questionnaires are circulated in different countries and not translated into local languages. The questions should be designed to stimulate the participants to answer how the procedures really are performed, and not how they ideally should be performed (e.g. ask for written procedures). Providers of such schemes should also be aware that the order in which the answer options are listed (in multiple choice questions) might influence the results. Standardized follow-up of the surveys should be performed by offering advice to the participants on how to improve their procedures.

Type II: Circulation of samples simulating errors

EQAS for the pre-analytical phase can be performed using real samples with a matrix potentially interfering with the measurement procedures, for example hemolysed, lipemic or icteric samples, or samples containing drugs known to interfere. Another example is to send the wrong sample material (e.g. serum instead of plasma). This type is in some instances comparable to analytical EQAS, since a sample is sent to the laboratories for measurement of pre-defined analytes. As for analytical EQAS, case histories can be included to elucidate which pre-analytical procedures are performed and how the results (or lack of results in case of sample rejection) are communicated to the physicians.

The feedback reports for these surveys are comparable to feedback reports for regular EQA surveys, and should include a comparison of the laboratory’s results to the results of all participants in addition to an overview of existing guidelines/recommendations and recent publications.

Surveys focusing on problematic areas within the pre-analytical phase may not be suitable for regular schemes, but can be conducted as specialized pre-analytical EQAS performed once (or a couple of times). In this way, the EQAS organizer can choose to study the most relevant current issue. The Nordic committee for External Quality Assurance Programmes in Laboratory Medicine (NQLM) carried out four different EQA interference surveys between 1999 and 2002 (28). The aim was to assess the effects of hemolysed, icteric and lipemic samples on some common clinical chemistry serum analyses. During 2014, a similar EQA survey will be performed in the Nordic countries to obtain updated information on analytical performance and handling of hemolysed samples.

One of the major goals of the newly started European Commission funded project SPIDIA (Standardization and improvement of generic pre-analytical tools and procedures for in-vitro diagnostics) has been to develop evidence-based guidelines for the pre-analytical phase of blood samples used for molecular testing (29). The SPIDIA project has resulted in two pan-European EQAS focusing on the pre-analytical phase of DNA and RNA-based analyses (30,31) (Table 1). Blood samples were sent to the laboratories, which performed DNA/RNA extraction and returned the extracted material to the EQA organizer for quality assessment. The results of these surveys will provide the basis for performing new pan-European EQAS and for implementing evidence-based guidelines for the pre-analytical phase of DNA and RNA analyses (29).

Challenges regarding the use of real samples in pre-analytical EQAS

The requirements for samples to be used in pre-analytical EQAS are similar to the requirements for samples in analytical EQAS. The samples should be similar to native patient samples containing the actual interferences, and should be homogeneous and stable during the survey period. Additionally, they should reflect actual poor pre-analytical conditions such as incorrect blood sampling, sample errors due to tubes containing incorrect additives (e.g. serum or EDTA when citrated plasma is required), or inappropriate specimen preparation, centrifugation, aliquoting, pipetting or sorting. Mimicking real-life situations is a challenge when producing sample material on a large scale. Only a limited set of pre-analytical problems can be investigated by this method (Type II), and for some areas it seems natural to perform the EQAS once or on an irregular basis. One should also take into consideration the bias introduced if the participants know that they are to receive a sample with an interfering substance (because they are participating in a pre-analytical scheme); it might therefore be better to include contaminated samples in a regular EQA scheme.

Type III: Registration of errors/adverse events

EQAS for the pre-analytical phase can be performed by initiating registration of defined and standardized types of errors (or adverse events). The error rate (or the rate of adverse events) during a pre-specified time interval should then be reported to the EQA organization. Systematic registration of incidents may point out bottlenecks and indicate which areas are most error-prone (32). An accredited laboratory must have a system for registration of incidents and is required to establish QIs as a measure of the laboratory’s performance (ISO 15189) (24). Utilizing this system in an EQA program (e.g. the EQA provider suggests QIs to the laboratories; the laboratories thereafter report their QI data to the EQA provider, making comparison of QI performance between laboratories possible) is perhaps the best EQA program for monitoring pre-analytical (and post-analytical) errors. The QIs found most appropriate should also be harmonized between EQA organizers.
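As an illustration of the reporting step, the following minimal sketch shows how an error rate for a single QI could be calculated before it is reported to the EQA organizer. The record structure `QIReport`, its field names and the figures used are hypothetical and are not taken from any of the schemes described here.

```python
from dataclasses import dataclass


@dataclass
class QIReport:
    """One laboratory's registration for one quality indicator (hypothetical structure)."""
    lab_id: str
    indicator: str
    errors: int  # number of registered errors in the reporting period
    total: int   # number of opportunities (e.g. samples or requests) in the same period


def error_rate_percent(report: QIReport) -> float:
    """Return the error rate as a percentage of all opportunities."""
    if report.total <= 0:
        raise ValueError("total must be positive")
    if not 0 <= report.errors <= report.total:
        raise ValueError("errors must be between 0 and total")
    return 100.0 * report.errors / report.total


# Illustrative figures only, not real data.
report = QIReport("lab-A", "samples rejected", errors=28, total=10_000)
print(f"{report.indicator}: {error_rate_percent(report):.2f}%")
```

Expressing every QI as errors per number of opportunities in the same period is one simple way to make rates comparable between laboratories of different size, provided that the laboratories count errors and opportunities in the same way.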

The participants should receive a feedback report showing their own QI performance compared to the results of all participants and to desirable quality specifications. It should also include historical data showing the development of the performance of the laboratory’s QIs.
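A feedback comparison of this kind could be sketched as follows. This is only an illustration under simple assumptions: the function name `feedback_summary`, the choice of median and quartiles as summary statistics, and the example rates are hypothetical, and a real scheme may use other statistics and quality specifications.

```python
from statistics import median, quantiles


def feedback_summary(own_rate: float, all_rates: list[float]) -> dict[str, float]:
    """Compare one laboratory's error rate (%) with the rates of all participants."""
    # Quartiles of the participant distribution (inclusive method, all labs included).
    q1, _, q3 = quantiles(all_rates, n=4, method="inclusive")
    # Number of participants with a strictly higher (worse) error rate.
    worse = sum(rate > own_rate for rate in all_rates)
    return {
        "own_rate": own_rate,
        "median": median(all_rates),
        "q1": q1,
        "q3": q3,
        "percent_of_labs_with_higher_rate": 100.0 * worse / len(all_rates),
    }


# Illustrative error rates (%) for five participants, not real data.
rates = [0.10, 0.20, 0.30, 0.40, 0.50]
summary = feedback_summary(own_rate=0.20, all_rates=rates)
for name, value in summary.items():
    print(f"{name}: {value:.2f}")
```

Repeating the same summary for each reporting period would give the historical view of the laboratory’s QI performance mentioned above.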

In 1989, the College of American Pathologists (CAP) started the Q-Probe program, carried out as time-limited studies lasting 1-4 months, to define critical performance measures in laboratory medicine, describe error rates on these measures and provide suggestions to decrease the error rate (33,34). In 1998, CAP developed this concept further and started the ongoing Q-Track program (33) (Table 1). Data are collected according to defined methods and time frames using standardized forms. CAP provides a quarterly “Performance Management Report” package, which helps the laboratories to identify opportunities for improvement and to monitor the effect of changes. So far, information on error rates from more than 130 worldwide inter-laboratory studies has been included in CAP’s databases (34). The error rate has been determined for almost all the important steps of the total testing process, QIs have been established, and suggestions for error reduction have been provided. Improvement can be quantified, as exemplified by one of the most frequently occurring errors, missing wristbands, for which the median error rate was reduced from more than 4% to less than 1% during four years (35). Similarly, laboratories that participated for two years demonstrated a significant declining trend in sample rejection rate (36).

The Spanish Society of Clinical Chemistry and Molecular Pathology (SEQC) has since 1998 conducted two EQAS per year, registering rejections of samples and the causes for rejections (Table 1). A retrospective analysis of 10 surveys (2001-2005) summarized and evaluated the data and concluded that “specimen lost or not received” (37.5%), “hemolysis” (29.3%) and “clotted samples” (14.4%) were the main causes for sample rejection (37). The percentage of sample rejections was highest if the samples were collected in wards outside the central laboratory. The retrospective evaluation of the program resulted in a simplified scheme, as some of the included variables (e.g. type of serum tubes (gel, silicone or no separator), type of anticoagulant employed (liquid or solid), extraction procedure (with or without vacuum) and material employed (glass or plastic device)) turned out to be irrelevant.

The results from an EQAS performed by Australian chemical pathology laboratories, aiming at measuring transcription errors and analytical errors, have been summarized (38). Transcription errors were defined as any instances where the data on individual request forms were not identical to the data entered into the laboratory’s computer system, e.g. patient identification, patient sex and age, patient ward location or address, tests requested and requesting doctor identification. This study showed a large inter-laboratory variation in total error rates (5 to 46%). The maximum error rates detected were 39% and 26% for transcription and analytical errors, respectively. The study concluded that there is a need to establish broader quality assurance programs and performance requirements for the pre-analytical phase.

Several pilot EQA schemes were performed during 2006-2009 throughout Australia and New Zealand, and the experiences from these surveys were used to develop the current “Key Incident Monitoring and Management Systems Quality Assurance” (KIMMS QA) program (39) (Table 1). In this scheme, the participating laboratories are asked to register a subset of pre- and post-analytical (PAPA) incidents, which represent either the most frequent or the most serious incidents, or incidents with the greatest opportunity for inter-laboratory benchmarking and improvement. The data from the participants are pooled to form a national frequency distribution of PAPA incidents, and each participating laboratory can compare its own results with this distribution. For 70% of all reported incidents, the root causes require interaction between the laboratory staff and other health care workers. In the last KIMMS EQA survey, the overall PAPA incident rate was 1.22% and the most frequent incident was inadequate patient or sample identification (0.28%) (39).

As part of a project to reduce errors in laboratory testing, the IFCC Working Group on Laboratory errors and patient safety (WG-LEPS) aimed to develop a series of QIs specifically designed for clinical laboratories (40,41). The aim of the project is to provide a common framework and to establish a set of harmonized QIs which should cover all steps of the TTP. The group concluded that a model of QIs managed as an EQAS can serve as a tool to monitor and control the pre-, intra- and post-analytical activities. The QIs suggested by WG-LEPS were evaluated in a study performed during 2009 and 2011. The results showed that QIs in the analytical phase improved much more than the corresponding QIs for the extra-analytical phases, probably because improvement in the extra-analytical phases may be more complex, requiring close cooperation between the laboratory staff and the health care workers outside the laboratory (42). This finding indicates that measurement of errors alone will not reduce error rates; corrective actions that include cooperation, teamwork and firm follow-up on achievements are necessary.

In a recent study performed in a group of clinical laboratories in Catalonia (Spain), the results obtained for the QIs of key processes were analyzed over a five-year period (2004-2008) (43). The objective was to determine the evolution of the QIs, identify processes requiring corrective measures, and obtain robust performance specifications. Different indicators were evaluated according to efficiency and safety. The average yearly inter-laboratory median value for each indicator over the five-year period was used as the desirable specification for that indicator. The values obtained were transformed to the Six Sigma scale, and processes with sigma values ≥ 4 were considered well controlled (44). The medians for most QIs were considered stable during the study period; however, if the sigma value was less than 4, the QIs were considered robust but subject to improvement. Examples of pre-analytic processes outside the laboratory that could be improved were “Total incidences in test requests” (sigma 3.4) and “Patient data missing” (sigma 3.4). Within the laboratory, “Undetected requests with incorrect patient name” obtained the lowest sigma value (2.9). The method used in this study could also be performed as a regular EQA scheme for the pre-analytical phase.
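The transformation of an error rate to the Six Sigma scale can be sketched as below. The exact conversion used in the cited study is not described here; this sketch assumes the common convention in which the sigma value is the standard normal quantile of the error-free fraction plus a 1.5 sigma long-term shift. The function names and the default shift are assumptions made for illustration.

```python
from statistics import NormalDist

# Conventional 1.5 sigma long-term shift; an assumption, not stated in the text above.
SHIFT = 1.5


def sigma_from_error_percent(error_percent: float, shift: float = SHIFT) -> float:
    """Convert an error rate (%) to a sigma value under the assumed convention."""
    if not 0.0 < error_percent < 100.0:
        raise ValueError("error_percent must be strictly between 0 and 100")
    error_free_fraction = 1.0 - error_percent / 100.0
    # Standard normal quantile of the error-free fraction, plus the assumed shift.
    return NormalDist().inv_cdf(error_free_fraction) + shift


def is_well_controlled(sigma: float, threshold: float = 4.0) -> bool:
    """Apply the criterion quoted above: sigma values >= 4 are considered well controlled."""
    return sigma >= threshold


# Illustrative error rates (%), not taken from the cited study.
for rate in (0.1, 1.0, 5.0):
    sigma = sigma_from_error_percent(rate)
    print(f"{rate:.1f}% errors -> sigma {sigma:.2f}, well controlled: {is_well_controlled(sigma)}")
```

Under this convention an error rate of about 0.62% corresponds to a sigma value of 4, so the threshold quoted above translates into roughly six errors per thousand opportunities.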

Challenges regarding the use of QIs managed as an EQAS

The EQA organization should develop QIs and distribute registration systems to the laboratories, which may be a labor-intensive process. Harmonization is of utmost importance, since variation in the methods used to register the errors might influence the results and make comparison between laboratories difficult. Harmonization of QIs may be difficult on an international basis if laboratory practices differ between regions, but harmonization within local areas should be possible. A challenge for the participating laboratories is the extra resources (time and personnel) needed to register their own errors. It is extremely important to focus on improving the system rather than on individuals, since a focus on individuals might lead to under-reporting of errors. Most errors in laboratory medicine are classified as blameless and represent the accumulation of a number of contributing events, indicating the need to modify systems as opposed to disciplining individuals (32,45).

Conclusion

So far, very few EQA organizations have focused on the pre-analytical phase, compared to the analytical phase, even though the pre-analytical phase is more prone to errors. In this paper, three different types of pre-analytical EQAS are described, each with a somewhat different focus and different challenges regarding implementation. A combination of the three is probably necessary to detect and monitor the wide range of errors occurring in the pre-analytical phase. It might be a good idea to start with national pre-analytical EQA schemes, since they are easier to adapt to local conditions and the results can be discussed at local user meetings. Based on the results of these schemes, international schemes aiming at harmonizing pre-analytical guidelines and QIs could be developed in cooperation with other countries. Pre-analytical EQA schemes of type II will in many instances be size-restricted by the supply of EQA sample material, while types I and III might be more suitable for international cooperation through international EQA organizations (e.g. EQALM). Development of pre-analytical EQA schemes and publication of the results should be encouraged, since information on such schemes and their ability to improve pre-analytical routines in the laboratories is scarce in the literature.

Notes

Potential conflict of interest

None declared.

References

1. Blumenthal D. The errors of our way. Clin Chem 1997;43:1305.

2. Bonini P, Plebani M, Ceriotti F, Rubboli F. Errors in laboratory medicine. Clin Chem 2002;48:691-8.

3. Lapworth R, Teal TK. Laboratory blunders revisited. Ann Clin Biochem 1994;31:78-84.

9. Plebani M, Carraro P. Mistakes in a stat laboratory: types and frequency. Clin Chem 1997;43:1348-51.

10. Rakha EA, Clark D, Chohan BS, El-Sayed M, Sen S, Bakowski L, et al. Efficacy of an incident-reporting system in cellular pathology: a practical experience. J Clin Pathol 2012;65:643-8.

http://dx.doi.org/10.1136/jclinpath-2011-200453.

11. Stahl M, Lund ED, Brandslund I. Reasons for a laboratory’s inability to report results for requested analytical tests. Clin Chem 1998;44:2195-7.

12. Witte DL, VanNess SA, Angstadt DS, Pennell BJ. Errors, mistakes, blunders, outliers, or unacceptable results: how many? Clin Chem 1997;43:1352-6.

13. Astion ML, Shojania KG, Hamill TR, Kim S, Ng VL. Classifying laboratory incident reports to identify problems that jeopardize patient safety. Am J Clin Pathol 2003;120:18-26.

http://dx.doi.org/10.1309/8U5D0MA6MFH2FG19.

18. Clinical and Laboratory Standards Institute (CLSI). Development and Use of Quality Indicators for Process Improvement and Monitoring of Laboratory Quality; Proposed Guideline. CLSI document GP35-P. Clinical and Laboratory Standards Institute, West Valley Road, Wayne, Pennsylvania US. 2009.

19. Fraser CG, Kallner A, Kenny D, Petersen PH. Introduction: strategies to set global quality specifications in laboratory medicine. Scand J Clin Lab Invest 1999;59:477-8.

http://dx.doi.org/10.1080/00365519950185184.

21. Hickner J, Graham DG, Elder NC, Brandt E, Emsermann CB, Dovey S, et al. Testing process errors and their harms and consequences reported from family medicine practices: a study of the American Academy of Family Physicians National Research Network. Qual Saf Health Care 2008;17:194-200.

http://dx.doi.org/10.1136/qshc.2006.021915.

24. International Organization for Standardization. ISO 15189: medical laboratories: particular requirements for quality and competence. Geneva, Switzerland: International Organization for Standardization; 2012.

25. External quality Control of diagnostic Assays and Tests (ECAT). Available at: http://www.ecat.nl/. Accessed November 4, 2013.

26. Aarsand AK, Villanger JH, Stole E, Deybach JC, Marsden J, To-Figueras J, et al. European specialist porphyria laboratories: diagnostic strategies, analytical quality, clinical interpretation, and reporting as assessed by an external quality assurance program. Clin Chem 2011;57:1514-23.

http://dx.doi.org/10.1373/clinchem.2011.170357.

27. Bilic-Zulle L, Simundic AM, Smolcic VS, Nikolac N, Honovic L. Self reported routines and procedures for the extra-analytical phase of laboratory practice in Croatia - cross-sectional survey study. Biochem Med 2010;20:64-74.

http://dx.doi.org/10.11613/BM.2010.008.

28. The Nordic committee for External Quality Assurance Programmes in Laboratory Medicine (NQLM). Nordic interference study 2000 (Lipid) and 2002 (Hemoglobin and Bilirubin). Available at: http://www.nkk-ekv.com/84240411. Accessed November 4, 2013.

29. Gunther K, Malentacchi F, Verderio P, Pizzamiglio S, Ciniselli CM, Tichopad A, et al. Implementation of a proficiency testing for the assessment of the preanalytical phase of blood samples used for RNA based analysis. Clin Chim Acta 2012;413:779-86.

http://dx.doi.org/10.1016/j.cca.2012.01.015.

30. Malentacchi F, Pazzagli M, Simi L, Orlando C, Wyrich R, Hartmann CC, et al. SPIDIA-DNA: An External Quality Assessment for the pre-analytical phase of blood samples used for DNA-based analyses. Clin Chim Acta 2013;424:274-86.

http://dx.doi.org/10.1016/j.cca.2013.05.012.

31. Pazzagli M, Malentacchi F, Simi L, Orlando C, Wyrich R, Gunther K, et al. SPIDIA-RNA: first external quality assessment for the pre-analytical phase of blood samples used for RNA based analyses. Methods 2013;59:20-31.

http://dx.doi.org/10.1016/j.ymeth.2012.10.007.

32. Reason J. Safety in the operating theatre - Part 2: human error and organisational failure. Qual Saf Health Care 2005;14:56-60.

33. Lawson NS, Howanitz PJ. The College of American Pathologists, 1946-1996. Quality Assurance Service. Arch Pathol Lab Med 1997;121:1000-8.

34. Howanitz PJ. Errors in laboratory medicine: practical lessons to improve patient safety. Arch Pathol Lab Med 2005;129:1252-61.

35. Howanitz PJ, Renner SW, Walsh MK. Continuous wristband monitoring over 2 years decreases identification errors: a College of American Pathologists Q-Tracks Study. Arch Pathol Lab Med 2002;126:809-15.

36. Zarbo RJ, Jones BA, Friedberg RC, Valenstein PN, Renner SW, Schifman RB, et al. Q-tracks: a College of American Pathologists program of continuous laboratory monitoring and longitudinal tracking. Arch Pathol Lab Med 2002;126:1036-44.

37. Alsina MJ, Alvarez V, Barba N, Bullich S, Cortes M, Escoda I, et al. Preanalytical quality control program - an overview of results (2001-2005 summary). Clin Chem Lab Med 2008;46:849-54.

http://dx.doi.org/10.1515/CCLM.2008.168.

38. Khoury M, Burnett L, Mackay MA. Error rates in Australian chemical pathology laboratories. Med J Aust 1996;165:128-30.

39. Quality Assurance Scientific and Education Committee (QASEC) of the Royal College of Pathologists of Australasia (RCPA). The Key Incident Monitoring & Management Systems (KIMMS). Available at: http://dataentry.rcpaqap.com.au/kimms/. Accessed November 4, 2013.

40. Sciacovelli L, O’Kane M, Skaik YA, Caciagli P, Pellegrini C, Da Rin G, et al. Quality Indicators in Laboratory Medicine: from theory to practice. Preliminary data from the IFCC Working Group Project “Laboratory Errors and Patient Safety”. Clin Chem Lab Med 2011;49:835-44.

http://dx.doi.org/10.1515/CCLM.2011.128.

42. Sciacovelli L, Sonntag O, Padoan A, Zambon CF, Carraro P, Plebani M. Monitoring quality indicators in laboratory medicine does not automatically result in quality improvement. Clin Chem Lab Med 2012;50:463-9.

http://dx.doi.org/10.1515/cclm.2011.809.

43. Llopis MA, Trujillo G, Llovet MI, Tarres E, Ibarz M, Biosca C, et al. Quality indicators and specifications for key analytical-extranalytical processes in the clinical laboratory. Five years’ experience using the Six Sigma concept. Clin Chem Lab Med 2011;49:463-70.

http://dx.doi.org/10.1515/CCLM.2011.067.

44. Westgard JO, Westgard SA. The quality of laboratory testing today: an assessment of sigma metrics for analytic quality using performance data from proficiency testing surveys and the CLIA criteria for acceptable performance. Am J Clin Pathol 2006;125:343-54.

45. Thomas EJ, Sherwood GD, Helmreich RL. Lessons from aviation: teamwork to improve patient safety. Nurs Econ 2003;21:241-3.